Show it once, and leave the rest to the robot

Team: Zhe Wang (ME), Gordon Li (ME), Tsegab Mekonnen (ME)

Advisors: Chengtao Wen (Siemens), Gabriel Gomes (ME)

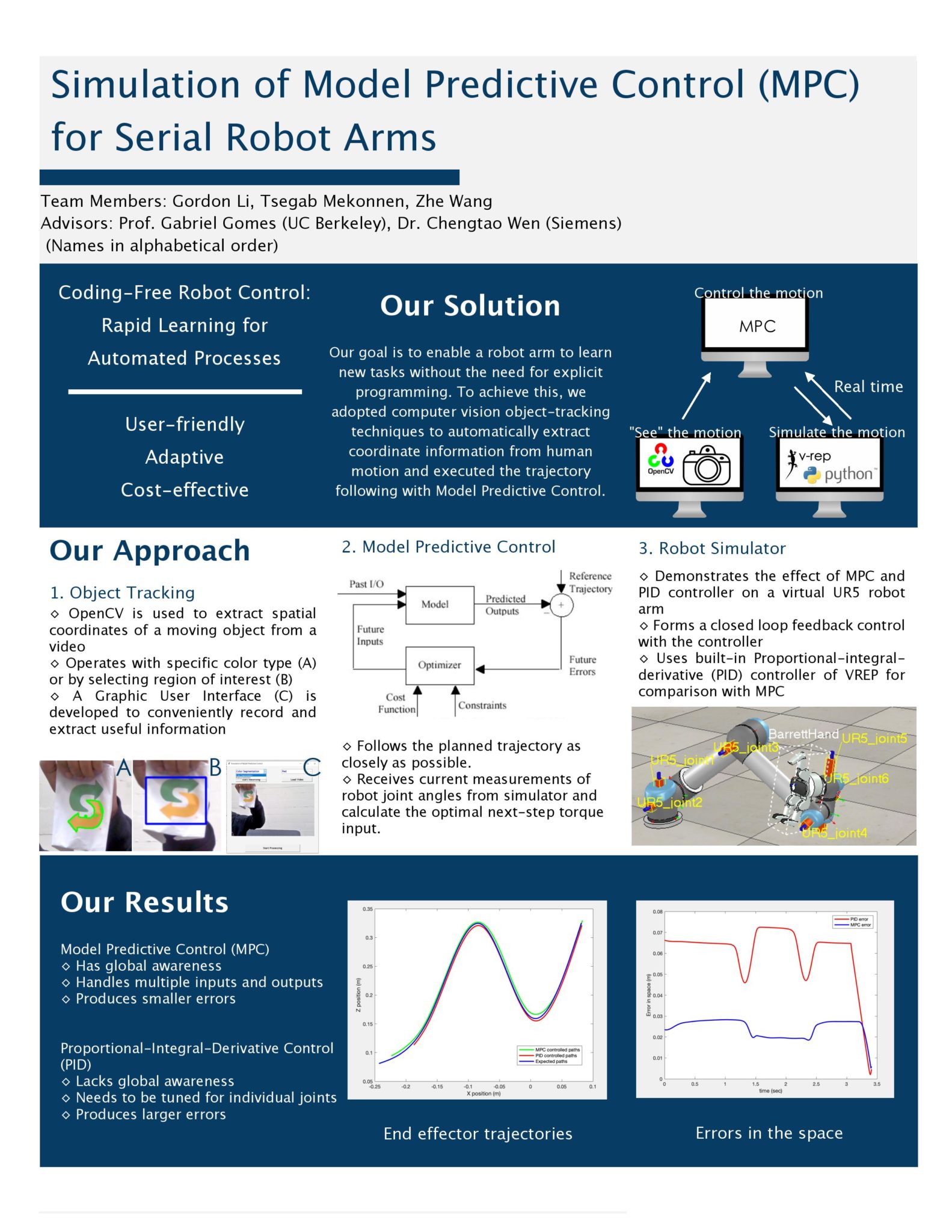

Industrial robot arms are widely used in fields such as manufacturing automation. When these robots need to adapt to a new production task, reprogramming the controller is the key step. Our goal is to make this transition more user-friendly, cost-effective, and adaptive by enabling a robot arm to mimic human motions without explicit programming. To achieve this, we use computer vision object-tracking techniques to automatically record, analyse, and extract useful information from human motion, and we execute the desired trajectory with Model Predictive Control (MPC).

Solution

Our goal is to enable a robot arm to learn new tasks without explicit programming. To achieve this, we use computer vision object-tracking techniques to automatically extract coordinate information from human motion, and Model Predictive Control to make the robot follow the resulting trajectory.

Benefits

- User-friendly

- Adaptive

- Cost-effective

Process

First, OpenCV is used to extract the spatial coordinates of a moving object from a video, either by tracking a specific color (A) or by selecting a region of interest (B). A graphical user interface (C) then makes it convenient to record videos and extract the useful information.
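
The color-based option can be illustrated with a minimal, dependency-free sketch. It is not the project's code: the function names, the synthetic frame, and the RGB thresholds are all assumptions made for illustration. In OpenCV the same two steps would typically map to `cv2.inRange` (build a mask of matching pixels) and `cv2.moments` (compute the centroid of the mask).

```python
def in_range(pixel, lower, upper):
    """True if every channel of the pixel lies inside [lower, upper]."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))


def color_centroid(frame, lower, upper):
    """Return the (x, y) centroid of all pixels within the color range.

    `frame` is a list of rows, each row a list of (R, G, B) tuples
    (a hypothetical stand-in for a decoded video frame).
    Returns None when no pixel matches.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if in_range(pixel, lower, upper):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def track(frames, lower, upper):
    """Collect one centroid per frame, skipping frames with no match."""
    trajectory = []
    for frame in frames:
        point = color_centroid(frame, lower, upper)
        if point is not None:
            trajectory.append(point)
    return trajectory
```

Applying `track` to every frame of a video yields a list of pixel coordinates, which is the kind of trajectory a controller can then be asked to follow once it is converted into robot coordinates.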

Next, Model Predictive Control (MPC) follows the planned trajectory as closely as possible: at each step it receives the current measurements of the robot joint angles from the simulator and calculates the optimal next-step torque.
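
The receding-horizon idea can be sketched for a single joint as follows. This is a simplified illustration under stated assumptions, not the project's controller: the joint is modeled as a pure inertia, the cost weights and time step are hypothetical, and an exhaustive search over a few candidate torques stands in for the numerical optimizer a real MPC would use.

```python
from itertools import product


def predict_cost(theta, omega, torques, reference, dt, inertia, w_vel, w_torque):
    """Roll the assumed joint model forward and accumulate the tracking cost."""
    cost = 0.0
    for torque, target in zip(torques, reference):
        omega += torque / inertia * dt   # angular velocity update
        theta += omega * dt              # joint angle update
        cost += (theta - target) ** 2 + w_vel * omega ** 2 + w_torque * torque ** 2
    return cost


def mpc_next_torque(theta, omega, reference, candidates=(-1.0, 0.0, 1.0),
                    dt=0.1, inertia=1.0, w_vel=0.1, w_torque=1e-3):
    """Pick the torque sequence with the lowest predicted cost over the
    horizon (one step per entry of `reference`) and return only its
    first torque. All default parameter values are illustrative."""
    best = min(
        product(candidates, repeat=len(reference)),
        key=lambda seq: predict_cost(theta, omega, seq, reference,
                                     dt, inertia, w_vel, w_torque),
    )
    return best[0]


if __name__ == "__main__":
    trajectory = [0.02 * k for k in range(1, 41)]  # hypothetical ramp reference (rad)
    theta, omega = 0.0, 0.0
    for k in range(len(trajectory)):
        # Re-plan at every step from the latest measured state.
        torque = mpc_next_torque(theta, omega, trajectory[k:k + 3])
        omega += torque / 1.0 * 0.1   # the "simulator" here reuses the same model
        theta += omega * 0.1
    print(f"final angle {theta:.3f} rad, target {trajectory[-1]:.3f} rad")
```

Only the first torque of the best sequence is applied; the plan is then recomputed from the next measurement, which is what closes the loop with the simulator.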

Then, the robot simulator demonstrates the effect of the MPC and PID controllers on a virtual UR5 robot arm. The simulator forms a closed feedback loop with the controller, and the built-in proportional-integral-derivative (PID) controller of VREP is used for comparison with MPC.
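
For readers unfamiliar with the baseline, a textbook discrete PID controller looks roughly like the sketch below. It is a generic illustration, not the simulator's built-in implementation; the class name and gains are hypothetical.

```python
class PID:
    """Textbook discrete PID controller (illustrative only)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0       # running integral of the error
        self.prev_error = None    # no derivative available on the first call

    def update(self, error, dt):
        """Return the control output for the current tracking error."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

A PID controller reacts only to the current and past error of a single signal, whereas MPC plans over a horizon of future reference points before choosing each torque.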

Results

- Has global awareness

- Handles multiple inputs and outputs

- Produces smaller errors
